LASER: Learning a Latent Action Space for Efficient Reinforcement Learning

Arthur Allshire, Roberto Martín-Martín, Charles Lin, Shawn Manuel, Silvio Savarese, Animesh Garg

Abstract

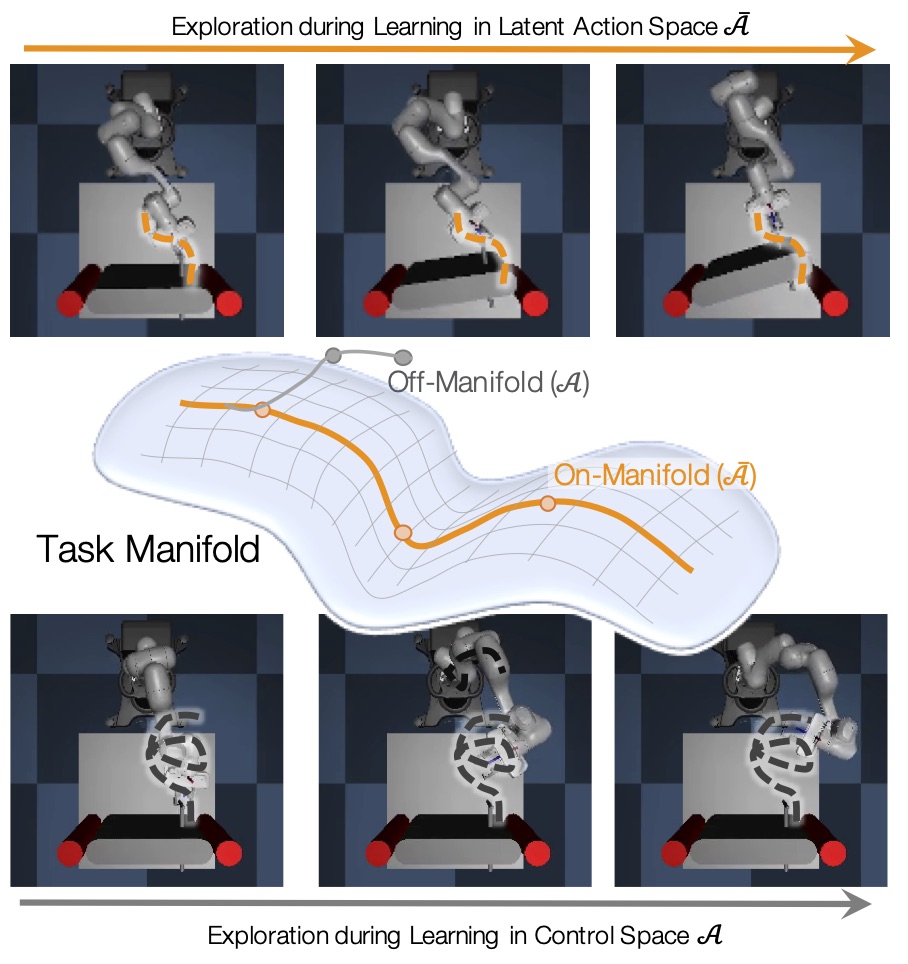

The process of learning a manipulation task depends strongly on the action space used for exploration: posed in an ill-suited action space, solving a task with reinforcement learning can be drastically inefficient. Additionally, similar tasks, or instances of the same task family, impose latent manifold constraints on the effective action space. Combining these two insights, we present LASER, a method to learn latent action spaces for efficient reinforcement learning. LASER factorizes the original learning problem into two sub-problems: action space learning, and policy learning in the new action space.

LASER leverages data from several similar manipulation task instances, collected either from an offline expert or online during policy learning, and learns from these trajectories a mapping from the original action space to a latent action space. LASER is trained as a variational encoder-decoder model that encodes raw actions into a disentangled latent space while maintaining both state-conditioned reconstruction and latent-space dynamic consistency. We evaluate LASER on two robotic tasks in simulation, comparing against reinforcement learning baselines and LASER ablations. We also analyze the benefit of policy learning in the newly generated latent action space and show improved sample efficiency, which we attribute to a better alignment of the action space with the task space. Visualizations of the action space manifold learned by LASER for contact-rich tasks support this observation.
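As a rough illustration of the training objective described above, the following NumPy sketch combines three terms: state-conditioned reconstruction of the raw action, a KL regularizer on the latent action, and a dynamics term that asks the latent action to predict the next state. It is not the authors' implementation. The linear maps, the dimensions, the `beta` and `dyn_weight` weights, the β-VAE-style reading of "disentangled", and the next-state-prediction reading of "dynamic consistency" are all assumptions made for this example.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical sizes, chosen only for illustration.
STATE_DIM, ACTION_DIM, LATENT_DIM = 6, 4, 2


def linear(in_dim, out_dim):
    """Random linear layer standing in for a neural network (assumption)."""
    return rng.normal(scale=0.1, size=(in_dim, out_dim)), np.zeros(out_dim)


enc_W, enc_b = linear(STATE_DIM + ACTION_DIM, 2 * LATENT_DIM)
dec_W, dec_b = linear(STATE_DIM + LATENT_DIM, ACTION_DIM)
dyn_W, dyn_b = linear(STATE_DIM + LATENT_DIM, STATE_DIM)


def encode(state, action):
    """Map (state, raw action) to the mean and log-variance of a latent action."""
    h = np.concatenate([state, action], axis=-1) @ enc_W + enc_b
    mean, log_var = np.split(h, 2, axis=-1)
    return mean, log_var


def decode(state, latent):
    """Map (state, latent action) back to a raw action."""
    return np.concatenate([state, latent], axis=-1) @ dec_W + dec_b


def predict_next_state(state, latent):
    """Hypothetical latent dynamics model: next state from (state, latent action)."""
    return np.concatenate([state, latent], axis=-1) @ dyn_W + dyn_b


def laser_style_loss(state, action, next_state, beta=1.0, dyn_weight=1.0):
    """Return the total loss and its three terms for a batch of transitions."""
    mean, log_var = encode(state, action)
    # Reparameterization: sample a latent action from N(mean, exp(log_var)).
    latent = mean + np.exp(0.5 * log_var) * rng.standard_normal(mean.shape)
    # State-conditioned reconstruction of the raw action.
    recon = np.mean(np.sum((decode(state, latent) - action) ** 2, axis=-1))
    # KL divergence from the latent posterior to a standard normal prior.
    kl = np.mean(-0.5 * np.sum(1 + log_var - mean**2 - np.exp(log_var), axis=-1))
    # Dynamics term: the latent action should explain the observed transition.
    dyn = np.mean(
        np.sum((predict_next_state(state, latent) - next_state) ** 2, axis=-1)
    )
    total = recon + beta * kl + dyn_weight * dyn
    return total, {"recon": recon, "kl": kl, "dynamics": dyn}


# Usage on a random batch of 8 transitions (synthetic data, not from the paper).
states = rng.normal(size=(8, STATE_DIM))
actions = rng.normal(size=(8, ACTION_DIM))
next_states = rng.normal(size=(8, STATE_DIM))
total, terms = laser_style_loss(states, actions, next_states)
```

Under this sketch, the second sub-problem (policy learning in the new action space) would have the policy output a latent action, with `decode(state, latent_action)` converting it to a raw action before it is sent to the robot.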

Acknowledgements

This project is supported by the following.