Research Projects

We aim to build algorithms for perceptual representations learned by and for interaction, causal understanding of mechanisms, and physically-grounded reasoning in practical settings. An emblematic north star is to enable an autonomous robot to watch an instructional video, or a set of such videos, and then learn a policy to execute the task in a new setting. We build both algorithms and systems with a broad range of applications across domains in robot autonomy. The PAIR group blends ideas from Causality, Perception, and Reinforcement Learning towards this vision.

As a group we pride ourselves on building and applying learning algorithms on different real robot platforms.

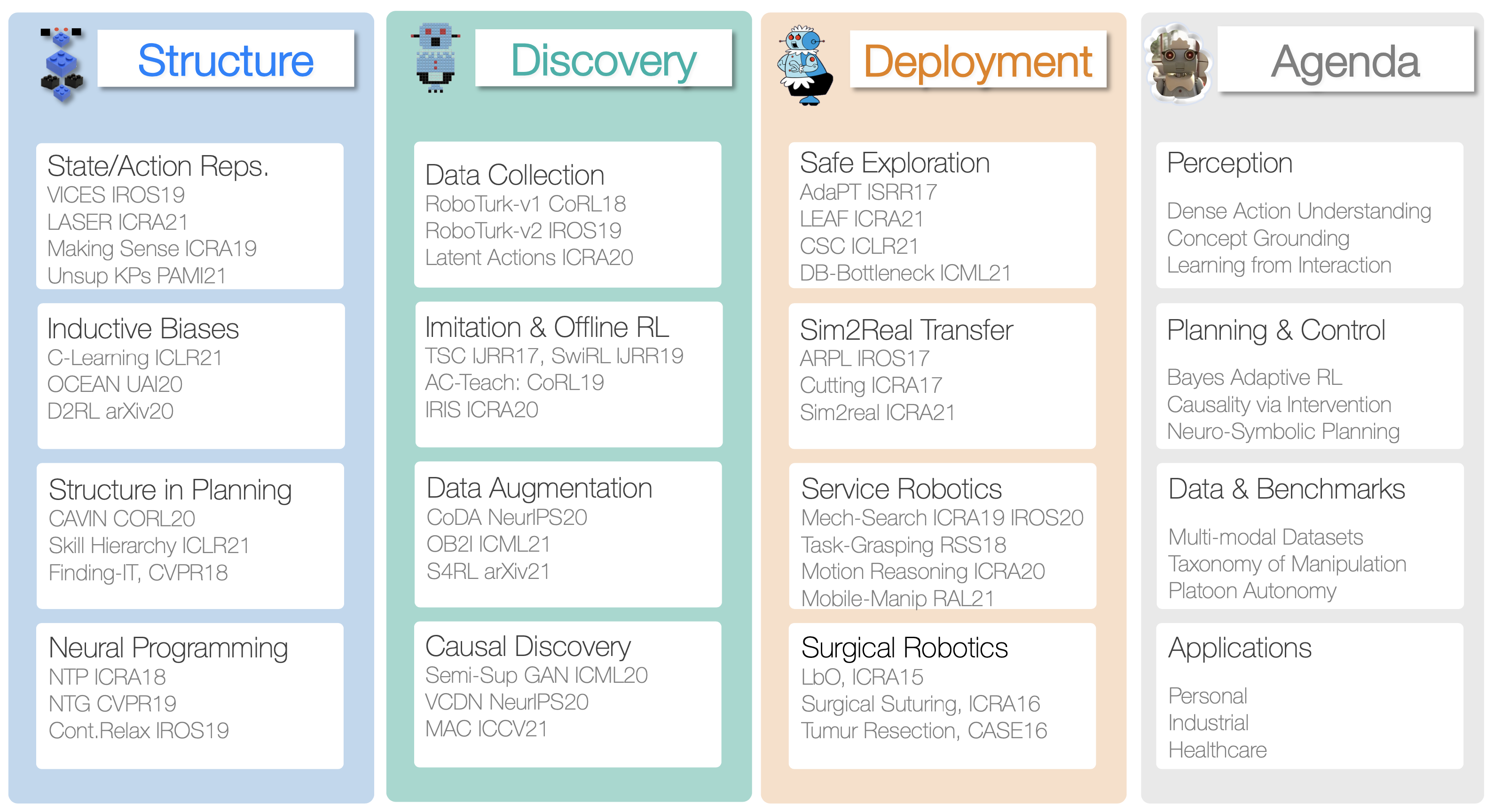

1. Generalizable Representations in RL for Robotics

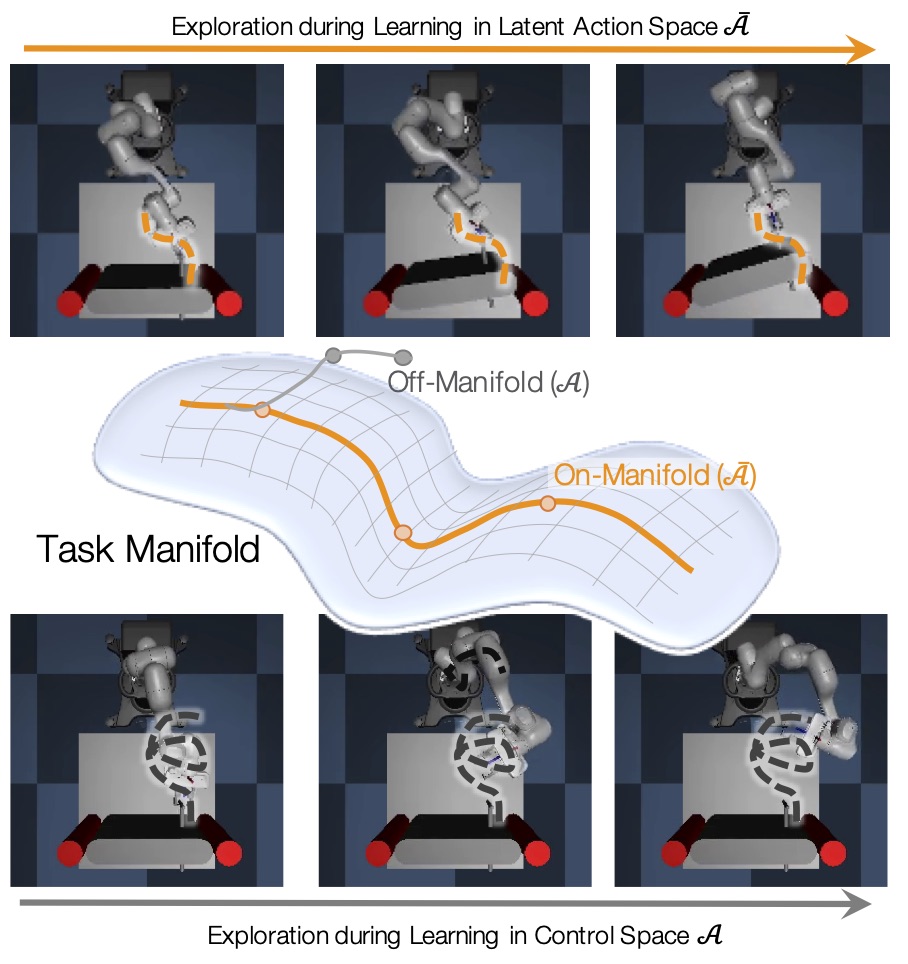

A key focus of our work is to understand the role of representations in RL in achieving efficiency and generalization in skill acquisition. An RL system has four main components: a state space (input), an action space (output), a learning rule, and a policy (or value) model.
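To make the four components concrete, here is a minimal sketch in Python. It is not one of the group's methods: it is plain tabular Q-learning on a hypothetical five-state chain, and every name in it (`step`, `train`, `greedy_policy`, the chain itself) is an assumption introduced for illustration.

```python
import random

N_STATES = 5          # state space: positions 0..4 on a chain; 4 is the goal
ACTIONS = (0, 1)      # action space: 0 = step left, 1 = step right


def step(state, action):
    """Toy deterministic dynamics: reward 1.0 only on reaching the goal."""
    if action == 0:
        next_state = max(state - 1, 0)
    else:
        next_state = min(state + 1, N_STATES - 1)
    done = next_state == N_STATES - 1
    return next_state, (1.0 if done else 0.0), done


def train(episodes=500, alpha=0.5, gamma=0.9, max_steps=100, seed=0):
    """Tabular Q-learning driven by a uniformly random behaviour policy."""
    rng = random.Random(seed)
    q = [[0.0 for _ in ACTIONS] for _ in range(N_STATES)]  # value model
    for _ in range(episodes):
        state = 0
        for _ in range(max_steps):
            action = rng.choice(ACTIONS)  # explore uniformly (off-policy)
            next_state, reward, done = step(state, action)
            # Learning rule: move Q(s, a) toward the bootstrapped target.
            target = reward + (0.0 if done else gamma * max(q[next_state]))
            q[state][action] += alpha * (target - q[state][action])
            state = next_state
            if done:
                break
    return q


def greedy_policy(q):
    """Policy: in each non-goal state, pick the highest-valued action."""
    return [max(ACTIONS, key=lambda a: q[s][a]) for s in range(N_STATES - 1)]


if __name__ == "__main__":
    print(greedy_policy(train()))
```

Each of the four pieces can be swapped independently here: a different state encoding, a different action parameterization, a different update rule, or a different function approximator in place of the table.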

Structured biases upend contemporary methods in all four dimensions, pointing to a need for deeper analysis of representations in RL.

- States: Unsupervised Keypoints, Making Sense of Touch and Vision

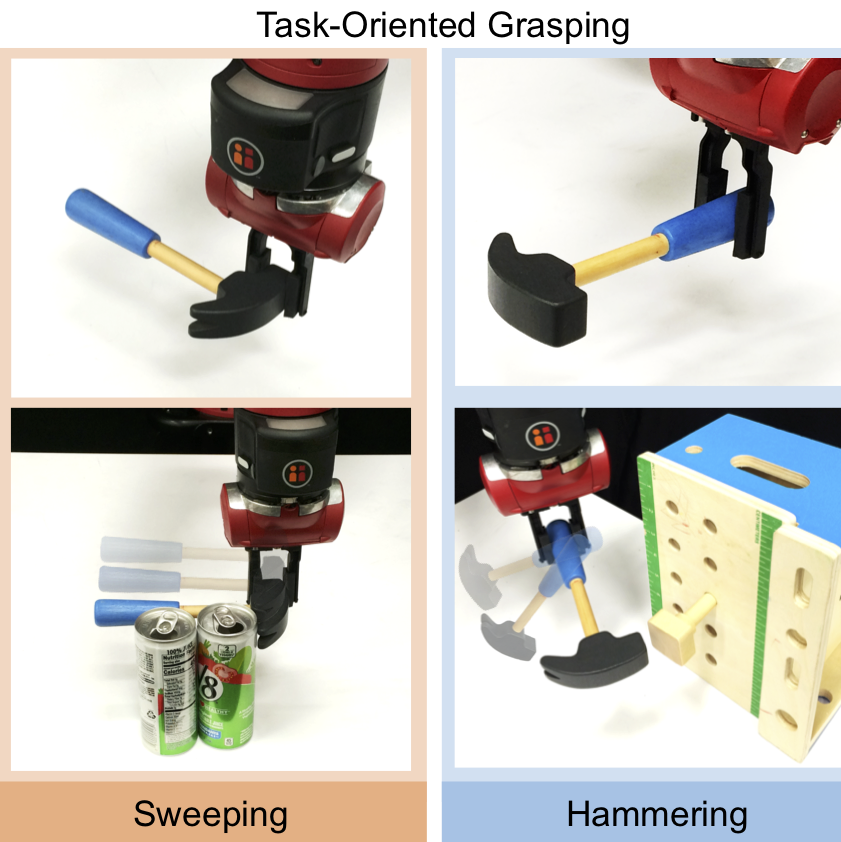

- Object Representations: Task Oriented Grasping, Affordance for Tool-Use

- Actions: VICES, LASER, GliDE

- Algorithms: C-Learning, LEAF, OCEAN

- Architectures: Deep-Dense nets in RL

- 3D Vision: Object and Scene representations for manipulation.

- Perceptual Concept Learning

- Geometric Deep Learning for discovery of symmetries

2. Causal Discovery and Inference in Robotics

Causal understanding is one of the key pillars of my current and future agenda. A simulator is a generative world model, and similarly follows a system of structural mechanisms. However, model learning focuses solely on statistical dependence, while Causal Models go beyond it to build representations that support intervention, planning, and modular reasoning. These methods provide a concrete step towards bridging vision and robotics through sub-goal inference and counterfactual imagination.
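The gap between statistical dependence and intervention can be seen in a toy structural causal model. The sketch below is an assumption made for illustration, not a model from our work: a hidden variable Z drives both X and Y, and X has no effect on Y. The function names and noise levels are hypothetical.

```python
import random


def sample(rng, do_x=None):
    """Draw (x, y) from a toy structural causal model Z -> X, Z -> Y.

    Passing do_x overrides X's mechanism, modelling the intervention do(X = do_x).
    """
    z = rng.random() < 0.5                    # hidden common cause
    x = z if rng.random() < 0.9 else not z    # X copies Z with 10% noise
    if do_x is not None:
        x = do_x                              # intervention cuts the Z -> X edge
    y = z if rng.random() < 0.9 else not z    # Y depends on Z only, never on X
    return int(x), int(y)


def mean_y(pairs, x_value):
    """Average of Y over the samples whose X equals x_value."""
    ys = [y for x, y in pairs if x == x_value]
    return sum(ys) / len(ys)


def observational_gap(n=20000, seed=0):
    """Estimate E[Y | X=1] - E[Y | X=0] from passively observed data."""
    rng = random.Random(seed)
    pairs = [sample(rng) for _ in range(n)]
    return mean_y(pairs, 1) - mean_y(pairs, 0)


def interventional_gap(n=20000, seed=0):
    """Estimate E[Y | do(X=1)] - E[Y | do(X=0)] by setting X directly."""
    rng = random.Random(seed)
    on = [sample(rng, do_x=1) for _ in range(n)]
    off = [sample(rng, do_x=0) for _ in range(n)]
    return mean_y(on, 1) - mean_y(off, 0)


if __name__ == "__main__":
    print("observational gap: ", observational_gap())
    print("interventional gap:", interventional_gap())
```

In this toy model the observational gap is large because X and Y share a cause, while the interventional gap is close to zero because setting X does nothing to Y. A model fit only to observed correlations would predict the former; a causal model predicts the latter.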

- Disentangled Generative Models: Semi-Supervised StyleGAN, Unsupervised Keypoints

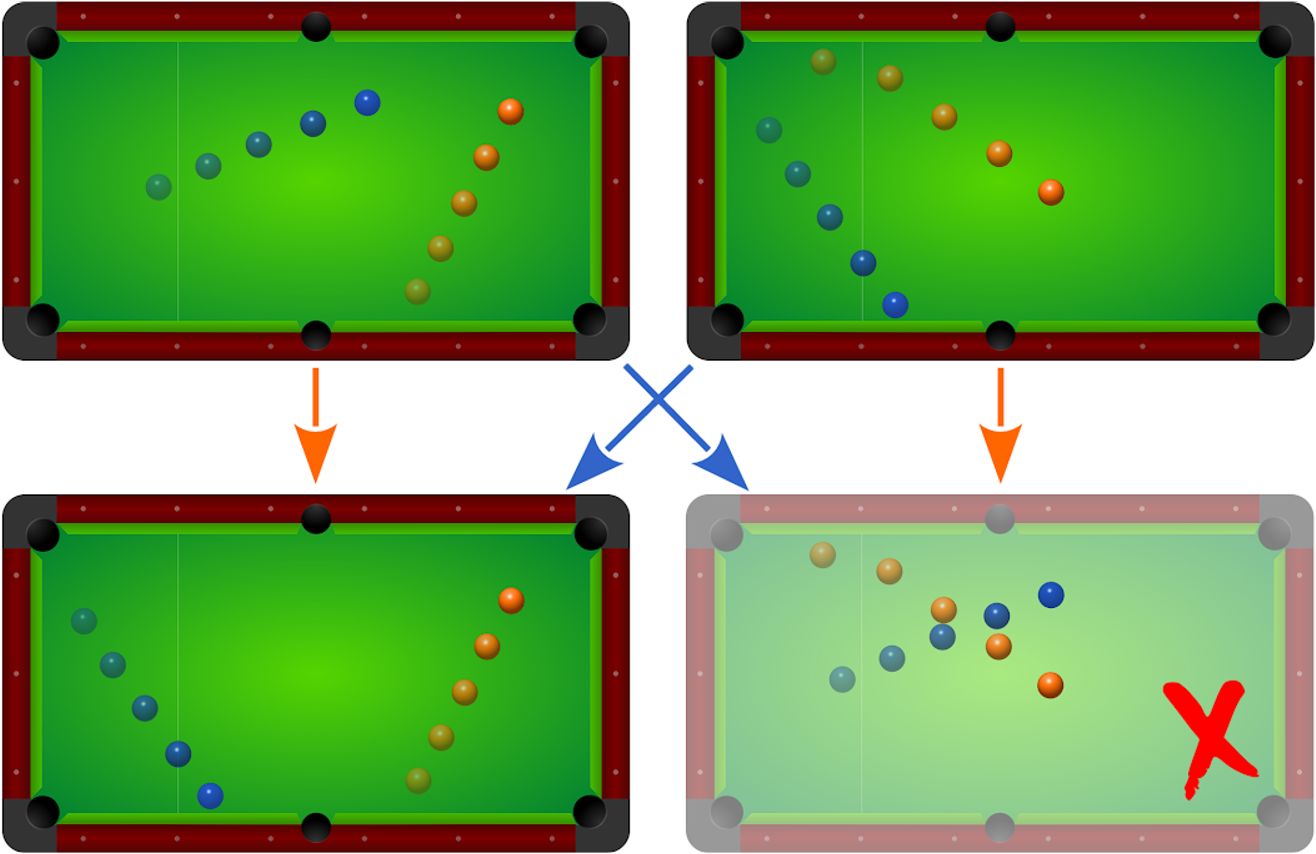

- Causal Factor Graphs: Visual Causal Discovery

- Instruction Guided Counterfactual Generation: Action Concepts

3. Crowd-Scale Robot Learning with Imitation/Offline/Batch RL

Data-driven methods help RL in exploration and reward specification. Robot learning, however, is limited by modest-sized real data. Access to data brings new algorithmic opportunities to robotics, as it did in vision and language. However, it also poses challenges due to the static nature of the data and covariate shift.

- Scalable Teleoperation with Roboturk: Roboturk-v1, Roboturk-v2

- Imitation Learning: AC-Teach, LbW, Goal-based Imitation

- Offline/Batch Policy Learning and Causal Data Augmentation: IRIS, CoDA, S4RL

- Safe Transfer to Real Systems: Adversarial Policy Learning, Adaptive Policy Transfer, Conservative Safety Critics

4. Structured Biases for Hierarchical Planning

Procedural reasoning, such as in robotics, needs both skills and their structured composition for interaction planning towards a higher-order objective. However, manual composition of skills via finite-state machine design is both tedious and unscalable. This intensifies the need for inductive bias in cognitive reasoning. I have developed imitation-guided policy learning in abstract spaces for hierarchically structured tasks.
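For a sense of what manual composition looks like, here is a minimal sketch of a hand-written finite-state machine that sequences three skills for a pick-and-place task. The skills, the `world` dictionary, and the transition table are all hypothetical stand-ins, not code from any of our systems.

```python
from typing import Callable, Dict, List, Tuple


# Hypothetical skill primitives: each edits a shared world dict and reports success.
def reach(world: dict) -> bool:
    world["at_object"] = True
    return True


def grasp(world: dict) -> bool:
    if not world.get("at_object"):
        return False  # cannot grasp before reaching the object
    world["holding"] = True
    return True


def place(world: dict) -> bool:
    if not world.get("holding"):
        return False  # nothing in the gripper to place
    world["holding"] = False
    world["placed"] = True
    return True


SKILLS: Dict[str, Callable[[dict], bool]] = {
    "reach": reach,
    "grasp": grasp,
    "place": place,
}

# Hand-authored transitions: (current skill, did it succeed?) -> next state.
TRANSITIONS: Dict[Tuple[str, bool], str] = {
    ("reach", True): "grasp",
    ("reach", False): "reach",
    ("grasp", True): "place",
    ("grasp", False): "reach",
    ("place", True): "done",
    ("place", False): "grasp",
}


def run_fsm(world: dict, start: str = "reach", max_steps: int = 20) -> List[str]:
    """Execute skills until the machine reaches 'done'; return the skill trace."""
    state, trace = start, []
    while state != "done" and len(trace) < max_steps:
        trace.append(state)
        succeeded = SKILLS[state](world)
        state = TRANSITIONS[(state, succeeded)]
    return trace


if __name__ == "__main__":
    print(run_fsm({}))
```

Every additional skill or failure mode adds rows to `TRANSITIONS` that someone must write and debug by hand, which is the scaling problem that motivates learning the task structure instead.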

- Neural Planning Modules for One-Shot Imitation: NTP, NTG, Continuous Symbolic Planner

- Task Structure Representations: Transition State Clustering, TSC-DL, SWIRL

- Learning from Videos: Finding-it

- Dynamics with Latent Hierarchical Structure: CAVIN, Skill Hierarchies

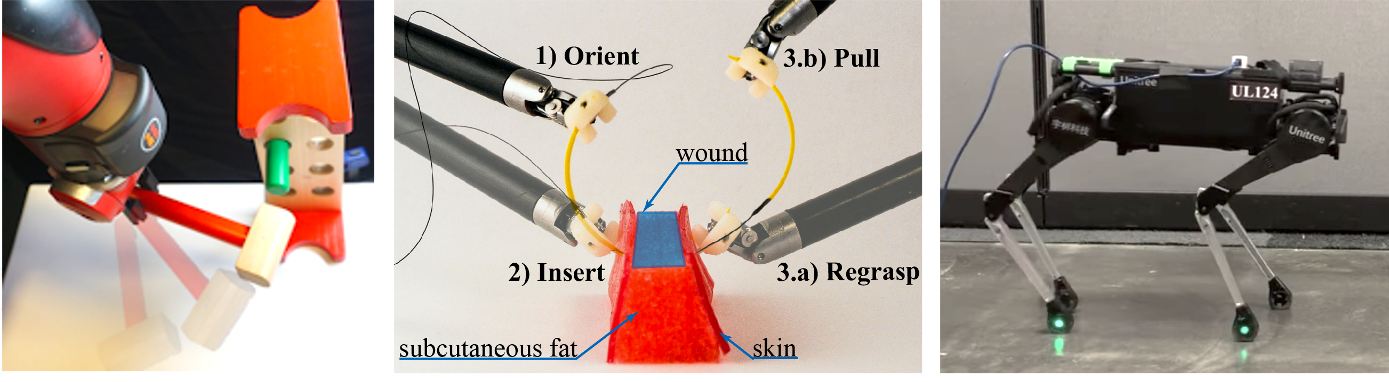

5. Applications to Real Robot Systems

The algorithmic ideas have been motivated by problems in mobility and manipulation in robotics, and have been evaluated on various physical robot platforms.

- Personal & Service Robotics: Tool Use, Task Planning, Assembly, Pick & Place, Laundry Layout, Assistive Teleoperation, Mechanical Search

- Surgical & Healthcare: Debridement, Suturing, Cutting, Extraction, Radiotherapy

- Legged Robotics: Contact Planning, Domain Randomization